As more circuits get pushed into SoCs (Systems on a Chip), the software that designs them becomes more and more important. Well, it’s been important for a while now. Important enough to be a multi-billion-dollar industry. Biiiiig money.

Harry Gries is an EDA consultant with over 20 years in the electronics industry in various roles. He now consults for different companies and also writes a blog about his experience called “Harry…The ASIC Guy”. I love hearing about the different pieces of the electronics food chain and Harry was nice enough to take some time to talk to me about his work. Let’s see what he had to say…

CG: Could you please explain your educational and professional background and how you got to where you are today?

Harry The ASIC Guy (HTAG): My education began when I was raised by wolves in the Northern Territory of Manitoba. That prepared me well for a stint at MIT and USC, after which I was abducted by aliens for a fortnight. I then spent 7 years as a digital designer at TRW and 14 years at Synopsys as an AE, consultant, and consulting and program manager. Synopsys and I parted ways and I have been doing independent consulting for 3 years now. A good friend of mine tricked me into writing a blog, so now I’m stuck doing that as well.

CG: What are some of the large changes you see from industry to industry? How does company culture vary from sector to sector?

HTAG: Let’s start with EDA, which did not really exist when the aliens dropped me off in 1985. There were a few companies that made polygon-pushing tools and workstations, and circuit complexity was in the 1000s of gates. Most large semiconductor companies had their own fabs and their own tools. Gate array and standard cell design were just getting started, but you had to use the vendor’s tools. Now, of course, almost all design tools are made by “EDA companies”.

As far as the differences between industries and sectors, I’m not sure there is such a big difference culturally. The company culture is set from the top. If you have Aart de Geus as your founder, then you have a very technology-focused culture. If you have Gerry Hsu (former Avant! CEO), then you have a culture of “win at all costs”.

CG: How hard was it for you to jump from being a designer to being in EDA? What kinds of skills would someone looking to get into the industry need?

HTAG: The biggest difference is clearly the “soft skills” of how to deal with people, especially customers, and understanding the sales process. For me it was a pretty easy transition because I had some aptitude and I really had a passion for evangelizing the technology and helping others. If someone wanted to make that change, they would benefit from training and practice on communicating effectively, dealing with difficult people, presentation skills, influence skills, etc.

CG: With regards to the EDA industry, how much further ahead of the curve does the software end up being? For instance, is EDA working on software necessary to define the 13 nm node currently?

HTAG: As you know, the industry is never at a single point. Rather, there is a spectrum of design nodes being used with some small percentage at the most advanced nodes. Most EDA tools are being developed to address these new nodes, often in partnership with the semiconductor manufacturers developing the process node or the semiconductor designers planning to use them. The big EDA companies are really the only ones, for the most part, that have the resources to do this joint development. Whatever is the newest node being developed, some EDA company is probably involved.

CG: You have written about the nature of the industry and how having so few players affects the nature of the system. What kinds of limitations do you see in the industry due to the economies of scale (TSMC dominance, for instance)?

HTAG: Consolidation is a fact in any industry and a good thing in EDA. Think of it as natural selection whereby the good ideas get gobbled up and live on with more funding (and the innovators are rewarded); the bad ideas die out. Most small EDA companies would want to be bought out as their “exit”. At the same time, there are some “lifestyle companies” also in EDA where the founders are happy just making a good living developing their tools and selling them without having to “sell out” to a larger company. For all these small companies, the cost of sales is a key factor because they cannot afford to have a large worldwide direct sales force as the larger EDA companies have. That’s where technologies like Xuropa come into play, which enable these smaller companies to do more with less and be global without hiring a global sales force.

CG: What drives the requirements placed upon new technology in the EDA space? How are the products developed? Are there a lot of interactions with specific big name designers (i.e. Intel) or does it shade more to the manufacturers (TSMC)?

HTAG: In fact, a handful of key customers usually drive the requirements, especially for small companies. When I was at Synopsys, Intel’s needs were the driver for a number of years. Basically, the larger the customer, the greater the clout. Other customers factor in, but not as much. The most advanced physical design capabilities of the tools are often a collaboration between the EDA company, the semiconductor manufacturers (e.g. TSMC), and also the designers (e.g. Qualcomm). Increasingly, EDA tools are focusing on the higher levels and you are seeing partnerships with software companies, e.g. Cadence partnering with Wind River.

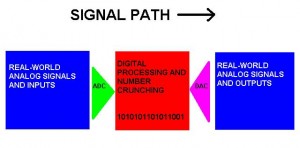

CG: A good chunk of chip design is written and validated in code. This contrasts with much more low level design decisions in the past. In your opinion how has this changed the industry and has this been a good or bad thing? Where will this go in the future, specifically for analog?

HTAG: As a digital designer and not an analog designer, for me it’s all written in code. Obviously, the productivity is much higher with the higher level of abstraction and the tools are able to optimize the design much better and faster than someone by hand. So it’s all good.

For analog, I am not as tied in, but I know that similar approaches are being tried; they use the idea that analog circuits can be optimized based on a set of constraints. I think this is a good thing as long as the design works. Digital is easy in that regard: just meet timing and retain functionality and it’s pretty much correct. For analog there is so much more (jitter, noise margin, performance across process variation, CMRR, phase margin, etc., etc.). I think it will be a while before analog designers trust optimization tools.

CG: It seems that the EDA industry has a strong showing of bloggers as compared to system level board engineers or even chip designers. What kinds of benefits have you seen in your own industry from having a network of bloggers and what about EDA promotes having so many people write about it?

HTAG: I think blogging is just one form of communication and since EDA people are already communicators (with their customers), they have felt more comfortable blogging than design engineers. Many of the EDA bloggers are in marketing types of positions at their companies or are independent consultants like me, so the objective is to start a conversation with customers that would be difficult to have in other ways. A result is that this builds credibility for themselves that then accrues to their company. I think there has also been a ton of sharing and learning due to these blogs and that has benefited the entire industry. On a personal level, I know so many more people due to the blog and that network is of great value.

CG: How has your career changed since moving back out of the EDA space and into consulting? What kind of work have you been doing lately? How has your experience helped you in consulting?

HTAG: It is interesting to have been on the EDA side and then move back into the design side. Whenever I communicate with an EDA company, whether a presentation or a tool evaluation or any conversation, I can easily put myself in their shoes and know where they are coming from. On the one hand, I can spot clearly manipulative practices such as spreading FUD (fear, uncertainty, and doubt) about a competitor and I can read between the lines to gain insights that others would miss. On the other hand, I also understand the legitimate reasons that EDA companies make certain decisions, such as limiting the length of tool evaluations, qualifying an opportunity, etc.

Most recently I’ve been working on some new technology development at a new process node. It’s been interesting because I’ve been able to dig deeper into how digital libraries are developed, characterized, and tested and I’ve also learned a lot more about the mixed-signal and analog world and also the semiconductor process.

Many thanks to Harry for taking the time to answer some questions about his industry and how he views the electronics world. If you have any questions, please leave them in the comments or pop over to Harry’s main site and leave a comment there.